![new_cubli]()

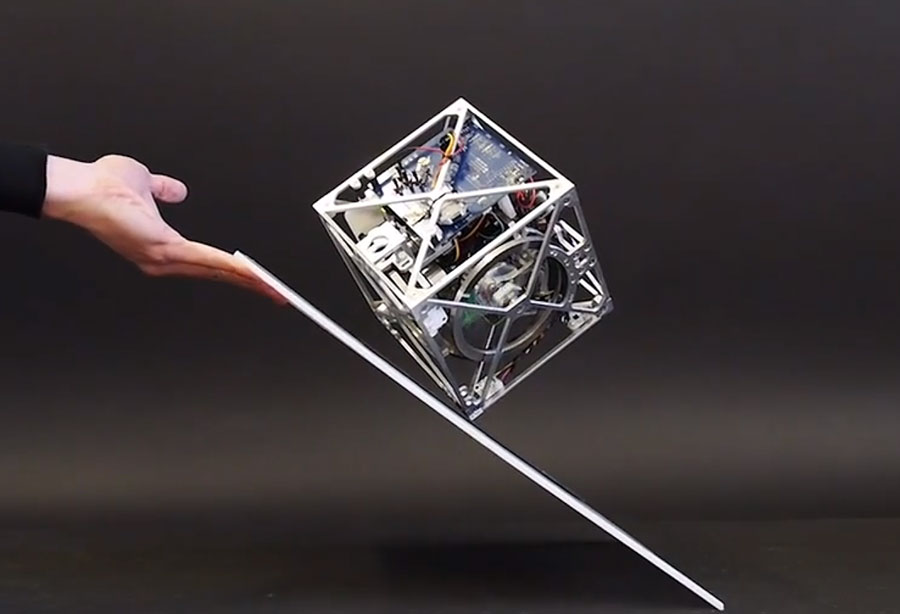

Update: New video of final robot! My colleagues at the Institute for Dynamic Systems and Control at ETH Zurich have created .

Update

This latest version of the Cubli can jump up, balance, and even “walk”. It is self-contained with respect to power and uses three slightly modified bicycle brakes instead of the metal barriers used in the previous version. We are currently developing learning algorithms that allow the Cubli to automatically learn and adjust the necessary parameters if a jump fails due to deterioration of the brakes or changes in inertia, weight, or the slope of the surface.
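To give a feel for what "adjusting the parameters after a failed jump" could look like, here is a minimal sketch in Python. It is not the algorithm used on the Cubli. It assumes a single tunable parameter (the wheel speed at the moment of braking) and a hypothetical `try_jump` callback that returns a signed error after each attempt: positive if the cube overshot, negative if it fell short. The function names, gain, and tolerance are all made up for illustration.

```python
def tune_jump_speed(try_jump, omega_start, gain=0.5, tol=0.1, max_trials=50):
    """Adjust the pre-brake wheel speed trial by trial until the jump error is small.

    try_jump(omega) is a hypothetical callback: it performs one jump attempt and
    returns a signed error (> 0 means overshoot, < 0 means the cube fell short).
    Returns (tuned wheel speed, number of trials used).
    """
    omega = omega_start
    for trial in range(1, max_trials + 1):
        error = try_jump(omega)
        if abs(error) <= tol:
            return omega, trial
        # Spin faster after falling short, slower after overshooting.
        omega -= gain * error
    return omega, max_trials


def simulated_jump(omega, omega_needed=52.0):
    """Stand-in for the real robot: the error is the distance to an unknown ideal speed."""
    return omega - omega_needed


if __name__ == "__main__":
    omega, trials = tune_jump_speed(simulated_jump, omega_start=40.0)
    print(f"tuned wheel speed: {omega:.2f} rad/s after {trials} trials")
```

If the brakes wear or the surface is tilted, the ideal speed shifts, and the same loop would simply converge to the new value over the next few attempts.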

This robot started with a simple idea:

Can we build a cube with 15 cm sides that can jump up, balance on its corner, and walk across our desk using off-the-shelf motors, batteries, and electronic components?

There are multiple ways to keep a cube balanced, but jumping up requires a sudden release of energy. Intuitively, momentum wheels seemed like a good way to store enough energy while still keeping the cube compact and self-contained.

Furthermore, the same momentum wheels can be used to implement a reaction-torque based control algorithm for balancing by exploiting the reaction torques on the cube’s body when the wheels are accelerated or decelerated.
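The reaction-torque idea can be illustrated with a small simulation. The sketch below models only a single axis (a body pivoting about one edge with one wheel), ignores friction and motor limits, and uses a plain PD law rather than the controller actually running on the robot. All masses, inertias, and gains are illustrative placeholders, not Cubli specs.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def simulate_balance(theta0, kp, kd, duration=2.0, dt=1e-3,
                     mass=0.5, com_dist=0.085,
                     inertia_pivot=5e-3, inertia_wheel=5e-4):
    """Integrate a one-axis reaction wheel pendulum; theta is the tilt from upright.

    inertia_pivot is the inertia of body plus wheel mass about the pivot,
    inertia_wheel is the wheel's inertia about its own axis (placeholder values).
    Returns (final tilt in rad, final wheel speed relative to the body in rad/s).
    """
    theta, theta_dot, wheel_speed = theta0, 0.0, 0.0
    for _ in range(int(duration / dt)):
        # PD law: motor torque on the wheel, in the direction the body is tilting.
        torque = kp * theta + kd * theta_dot
        # The body feels gravity plus the equal and opposite reaction torque.
        theta_ddot = (mass * G * com_dist * math.sin(theta) - torque) / inertia_pivot
        # The wheel accelerates relative to the body under the motor torque.
        wheel_acc = torque / inertia_wheel - theta_ddot
        theta_dot += theta_ddot * dt
        theta += theta_dot * dt
        wheel_speed += wheel_acc * dt
        if abs(theta) > math.pi / 4:  # a face has hit the ground
            break
    return theta, wheel_speed


if __name__ == "__main__":
    tilt, wheel = simulate_balance(theta0=0.05, kp=2.0, kd=0.2)
    print(f"with control:    tilt = {tilt:.5f} rad, wheel speed = {wheel:.1f} rad/s")
    tilt, wheel = simulate_balance(theta0=0.05, kp=0.0, kd=0.0)
    print(f"without control: tilt = {tilt:.3f} rad (fell over)")
```

Note that the PD law only stabilises the tilt. The wheel can be left spinning at a non-zero speed, which a real controller would also have to regulate.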

Can this work?

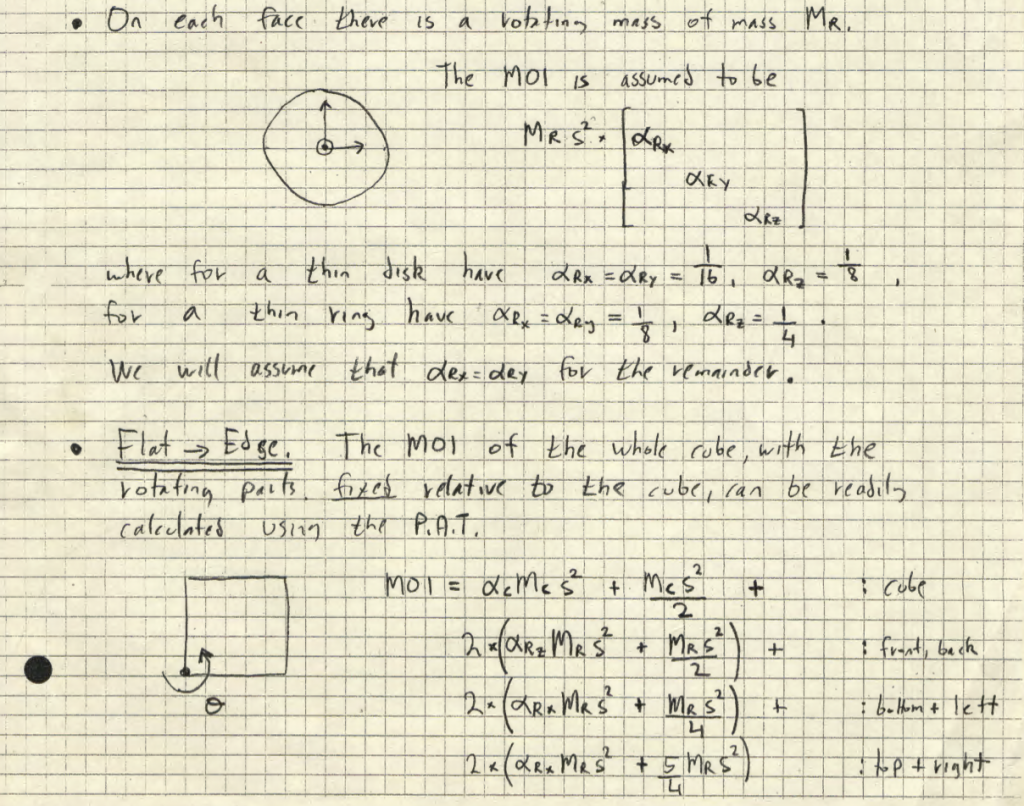

The first step in creating the robot, therefore, was to look at the physics to figure out if a jump-up based on momentum wheels was possible. The image below shows some of the math to figure out the Moment of Inertia (MOI) of the wheel and full cube.

![RaffNotes_Cubli]()

This mathematical analysis gave us a quantitative understanding of the system, which informed design choices such as the trade-offs between using three momentum wheels and a design with a momentum wheel mounted on each of the six inner faces of the cube.
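As a rough illustration of this kind of calculation, the sketch below computes moments of inertia for idealised shapes using textbook formulas: a wheel treated as a solid disk or a thin ring, and a uniform solid cube rotating about one of its edges (via the parallel axis theorem). The real robot is neither uniform nor solid, and the masses and radius used here are placeholders, so treat the numbers as order-of-magnitude only.

```python
import math


def disk_moi(mass, radius):
    """Solid disk about its central axis: I = 1/2 * m * r^2."""
    return 0.5 * mass * radius ** 2


def ring_moi(mass, radius):
    """Thin ring about its central axis: I = m * r^2."""
    return mass * radius ** 2


def cube_moi_about_edge(mass, side):
    """Uniform solid cube about one edge, using the parallel axis theorem."""
    i_center = mass * side ** 2 / 6.0   # axis through the centre, parallel to an edge
    offset = side / math.sqrt(2.0)      # distance from that axis to the edge
    return i_center + mass * offset ** 2


if __name__ == "__main__":
    # Placeholder values, not measured Cubli parameters.
    print(f"wheel as disk: {disk_moi(0.2, 0.06):.2e} kg m^2")
    print(f"wheel as ring: {ring_moi(0.2, 0.06):.2e} kg m^2")
    print(f"cube on edge:  {cube_moi_about_edge(1.0, 0.15):.2e} kg m^2")
```

For the same mass and radius, the ring has twice the inertia of the disk, which is why pushing the wheel's mass towards its rim stores more energy at a given speed.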

Another outcome of this analysis was a good understanding of the momentum wheel velocities required for the cube to jump up, and of the torques required to keep the cube in balance. Both factors were critical for the next step: determining the required hardware specs.
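To sketch how the required wheel velocity can be estimated, consider a single axis and assume the wheel is stopped instantly relative to the body. Angular momentum about the pivot edge is then conserved through the braking, and afterwards the body needs just enough kinetic energy to lift its centre of mass from the resting position (45° from vertical) to upright. The function and parameter names below are our own, friction is ignored, and the numbers are placeholders.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def jump_up_wheel_speed(inertia_wheel, inertia_total_pivot, mass_total,
                        com_dist, start_angle=math.pi / 4):
    """Wheel speed (rad/s) needed before braking for the body to just reach upright.

    inertia_wheel:        wheel inertia about its own axis
    inertia_total_pivot:  inertia of body plus locked wheel about the pivot edge
    mass_total, com_dist: total mass and distance from pivot to centre of mass
    start_angle:          initial tilt of the centre of mass from vertical
    """
    # Potential energy gained between the resting position and upright.
    energy = mass_total * G * com_dist * (1.0 - math.cos(start_angle))
    # Body speed right after braking that carries exactly this kinetic energy.
    omega_body = math.sqrt(2.0 * energy / inertia_total_pivot)
    # Conservation of angular momentum: I_wheel * omega_wheel = I_total * omega_body.
    return inertia_total_pivot * omega_body / inertia_wheel


if __name__ == "__main__":
    omega = jump_up_wheel_speed(5e-4, 5.5e-3, 0.5, 0.085)
    print(f"required wheel speed: {omega:.1f} rad/s ({omega * 60 / (2 * math.pi):.0f} rpm)")
```

Because the braking is an inelastic impact, only part of the wheel's kinetic energy ends up in the body. That is why the required speed scales with the ratio of total inertia to wheel inertia.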

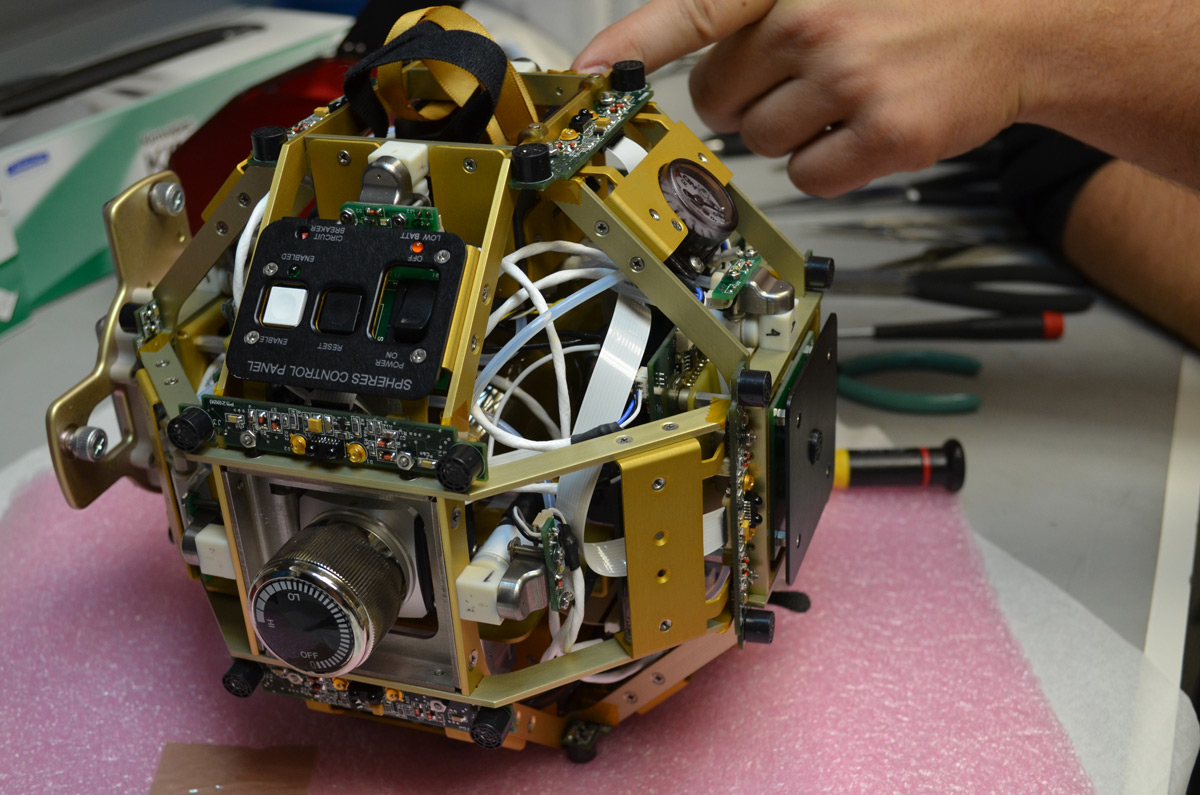

Specs and hardware design

Given the required velocities and torques determined above, it was clear that the momentum wheel’s motor and gearbox would be a major challenge in creating the robot. The mathematical model made it possible to tackle this problem systematically through a quantitative analysis of the trade-offs between higher velocities (i.e., more energy for jump-up) and higher torques (i.e., better stability when balancing).
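The velocity-versus-torque trade-off can be made concrete with an idealised gearbox model: an N:1 reduction divides the motor speed by N and multiplies its torque by N (times an efficiency factor). The sketch below tabulates the effect on the energy the wheel can store. The motor figures, wheel inertia, and efficiency are hypothetical, chosen only to show the trend.

```python
def geared_output(motor_speed, motor_torque, ratio, efficiency=0.9):
    """Ideal N:1 reduction: speed divides by N, torque multiplies by N times efficiency."""
    return motor_speed / ratio, motor_torque * ratio * efficiency


def wheel_energy(inertia, speed):
    """Kinetic energy stored in a spinning wheel: 1/2 * I * omega^2."""
    return 0.5 * inertia * speed ** 2


if __name__ == "__main__":
    # Hypothetical motor and wheel: 800 rad/s no-load speed, 0.03 N m torque, 5e-4 kg m^2.
    for ratio in (1, 2, 4, 8):
        speed, torque = geared_output(800.0, 0.03, ratio)
        energy = wheel_energy(5e-4, speed)
        print(f"ratio {ratio}:1  speed {speed:6.1f} rad/s  "
              f"torque {torque:.3f} N m  stored energy {energy:7.2f} J")
```

Doubling the ratio doubles the available balancing torque but cuts the storable energy to a quarter, since energy grows with the square of the wheel speed.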

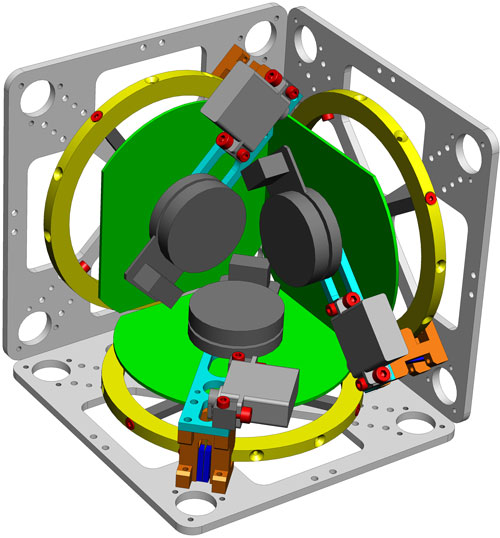

This mathematics-driven hardware design resulted in detailed specs for the robot’s core hardware components (momentum wheels, motors, gears, and batteries) and allowed a CAD design of the entire system.

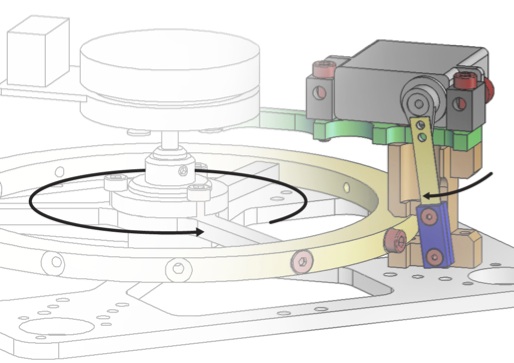

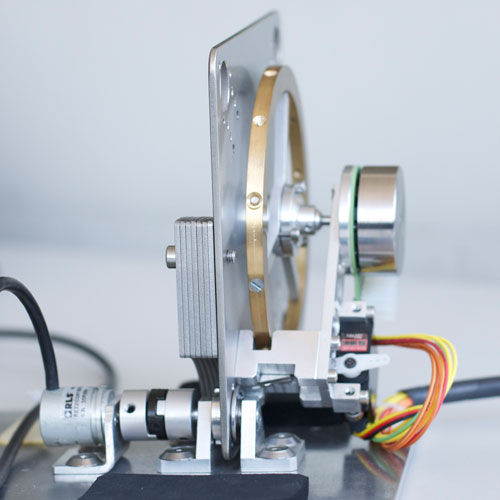

Part of this step was the design of a special brake to suddenly stop a momentum wheel to transfer its energy to the entire cube and cause it to jump up.

![Cubli_IROS2012_Page_3_Image_0001]()

The photo above shows an early design of this brake, consisting of a screw mounted on the momentum wheel, a servo motor (shown in black) to move a metal plate (in blue) into the screw’s path (in light brown), and a mounting bracket (in light brown) to transfer the momentum wheel’s energy to the cube structure. The current design uses a combination of hardened metal parts and rubber to reduce peak forces.

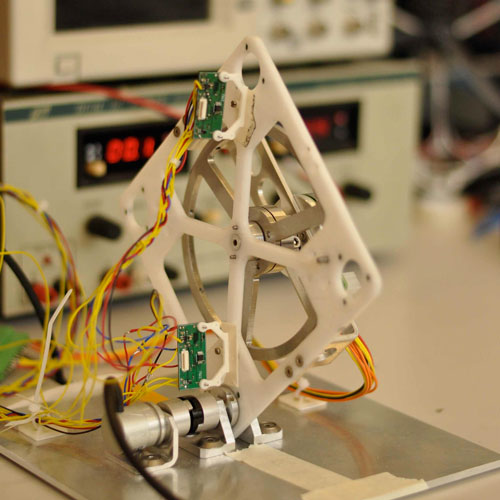

2D prototype

To validate the mechanics and electronics of the jump-up and balancing strategy, and to prove the feasibility of the overall concept, a one-dimensional version was built.

The results obtained with this 2D version of the cube were published in an IROS 2012 paper.

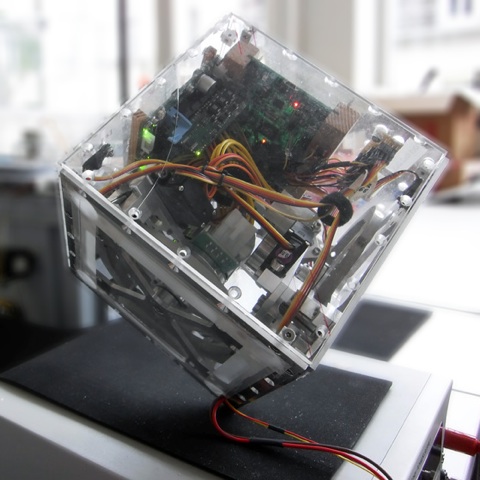

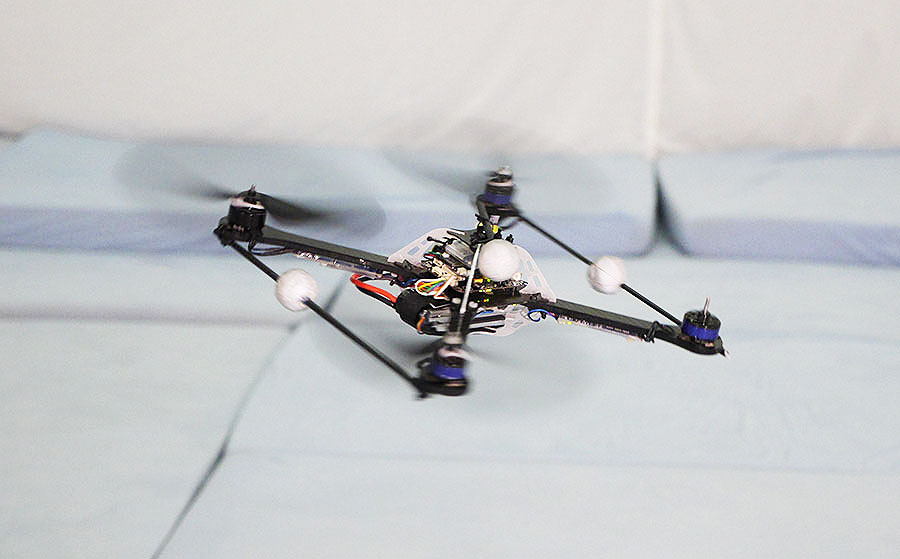

The final robot

Following successful tests with the 2D version, a full robot was built. The result is Cubli, a small cube-shaped robot, named after the Swiss-German diminutive for “cube”.

As you can see in the video, Cubli can balance robustly.

However, the first jump-up tests showed that the stress from suddenly braking the momentum wheel deformed the momentum wheels and the aluminium frame. This made repeated jump-ups of the whole Cubli impossible without replacing parts. It was therefore decided to tweak the structure and braking mechanism to reduce the mechanical stress caused by the jump-up.

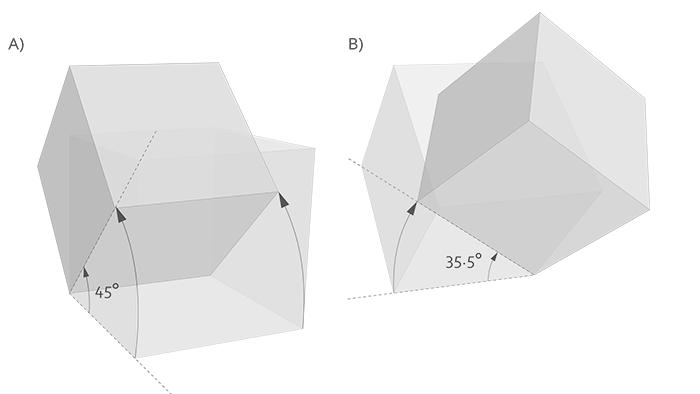

In addition to balancing, my colleagues are now investigating the use of controlled manoeuvres of jumping up, balancing, and falling over to make the Cubli walk across a surface.

![Cubli - standing up]()

Note 1: This post is part of our Swiss Robots Series. If you’d like to submit a robot to this series, or to a series for another country, please get in touch at info[@]robohub.org.

Note 3: If you have questions, post them below and we’ll post answers.

Some more photos:

![Cubli-Balancing-Robot]()

![Cubli_IROS2012_Page_5_Image_0001]()

![Cubli_IROS2012_Page_5_Image_0002]()

![Swiss Robots - Cubli]()

Thanks Gajan!

