
The EU-funded Collective Cognitive Robotics (CoCoRo) project has built the largest autonomous underwater swarm in the world. Over three and a half years of intensive research, CoCoRo’s interdisciplinary team of scientists from universities in Austria, Italy, Germany, Belgium and the UK built a swarm of 41 autonomous underwater vehicles (AUVs) that show collective cognition. Swarm members are aware not only of their own surroundings, but also of their own and other vehicles’ experiences. Throughout 2015, “The Year of CoCoRo”, the research team will upload a new video each week detailing a stage in the swarm’s development, with updates reported here on Robohub. Check out the first ten videos below!

Overview of the CoCoRo system

The swarm consists of 20 Jeff robots (the highlight of the project), 20 smaller (and slower) Lily robots, and a base station at the surface. The setup is shown in a short overview video.

In this simple form of collective self-awareness, the swarm processes information collectively, so that as a whole it knows more than any single swarm member. The swarm collects information not only about the environment but also about its own state. As well as staying together as a swarm, it is capable of navigating and diving, searching for sunken targets, and communicating and processing information as a group.

Research highlights

The swarm communicates findings via a self-established bridge to the “world above”

Not only can the swarm interact with itself, it can also communicate its findings and inner state to the world above. It does this by establishing, self-maintaining and even repairing a bridge between the swarm, located on the sea bed, and a human-controlled floating station that is located on the water’s surface.

During the project, many algorithms were developed and tested with the CoCoRo prototype swarm. Future applications include environmental monitoring and oceanic search missions.

Three-layer decentralized swarm design

When designing the swarm, the scientists decided to follow the KISS principle: Keep it Simple and Stupid. A decentralized approach was chosen for the CoCoRo project because a swarm has no single point of failure. Swarms are robust, flexible and scalable systems that can adapt easily to changing environments. Additionally, the technical and cost requirements for a single robot are lower than in non-swarm systems.

Three different layers were implemented for the cognition: individual, group and global. Single AUVs collect information, local groups share and compare it and, finally, the whole swarm makes collective decisions.
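To make the three layers concrete, here is a minimal Python sketch. It is not the CoCoRo code: the function names, the 0.5 threshold, the averaging rule and the majority vote are all assumptions chosen for illustration. It only shows how individual readings could be pooled in local groups and then turned into one swarm-wide decision.

```python
from statistics import mean

# Hypothetical sketch of the three cognition layers; all names and
# rules below are illustrative assumptions, not the project's software.

def individual_layer(reading, threshold=0.5):
    """Individual layer: one AUV turns its own sensor reading into a belief."""
    return reading >= threshold

def group_layer(readings, threshold=0.5):
    """Group layer: neighbours share their readings and compare them (here: average)."""
    return mean(readings) >= threshold

def global_layer(group_votes):
    """Global layer: the swarm adopts the opinion held by most groups."""
    return sum(group_votes) > len(group_votes) / 2

# Three local groups of three robots each, with made-up sensor readings.
groups = [
    [0.9, 0.7, 0.2],
    [0.1, 0.3, 0.6],
    [0.8, 0.6, 0.55],
]

individual_beliefs = [[individual_layer(r) for r in g] for g in groups]
group_votes = [group_layer(g) for g in groups]
decision = global_layer(group_votes)

print(individual_beliefs)  # single robots can disagree within a group
print(group_votes)
print(decision)
```

In the first group, one robot on its own would reach the opposite conclusion from its neighbours; the group layer smooths that out before the swarm decides.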

Bio-inspired algorithms build core functions

While designing the software, the scientists decided to focus on bio-inspired algorithms because they are known to be very flexible and robust. The focal animals were slime mold, fireflies, honeybees, cockroaches and fish. The algorithms were programmed in a modular way; self-organizing cognition algorithms were merged with self-organizing motion algorithms that were inspired by various animals. In this way, totally new algorithms emerged.

Three types of AUVs communicate via a novel multichannel underwater network

A swarm of 41 prototype AUVs was built for the CoCoRo project. The robots range in size from a human hand to a man’s foot and, depending on their activity, run for two to six hours without requiring a charge. A unique feature of the CoCoRo project is that sensors were implemented in a combination never used before in underwater robotics. These combinations were necessary to allow the construction of an autonomous underwater swarm that can coordinate mainly through simple signalling between nearest neighbors. In this way, a totally new heterogeneous underwater swarm system was created, consisting of three different types of AUVs: “Jeff” robots that search the sea bed; a base station on the water’s surface (connected to humans); and “Lily” robots that bridge and communicate information between the Jeff robots and the base station.

Check out our other videos below!

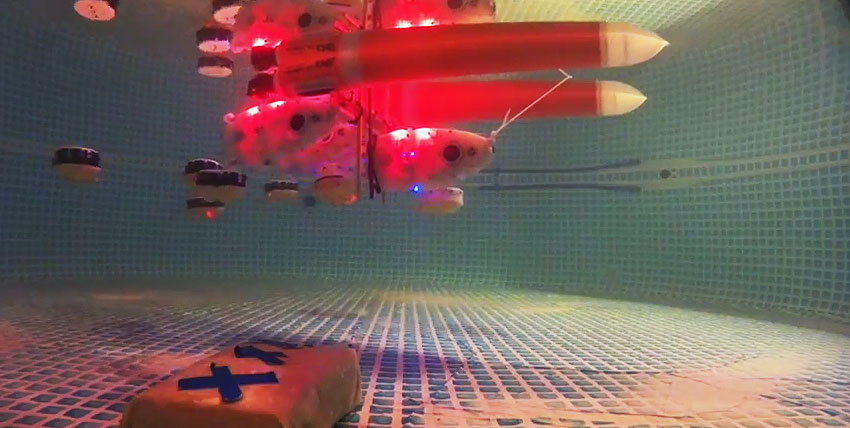

Introducing Jeff

The Jeff robots are extremely agile and can resist water currents of 1 m/s. They have autonomous buoyancy control, lateral and vertical motion with optimized propellers, and a rich set of sensors. For all these functions, novel energy-saving methods were implemented that guarantee autonomy and durability.

Jeff in turbulent waters

Jeff’s body shape and actuation also allow it to operate in turbulent waters, as tested in the following video with a remote-controlled Jeff. In this video, it was purposely driven into the most turbulent areas to see how the water currents would affect it.

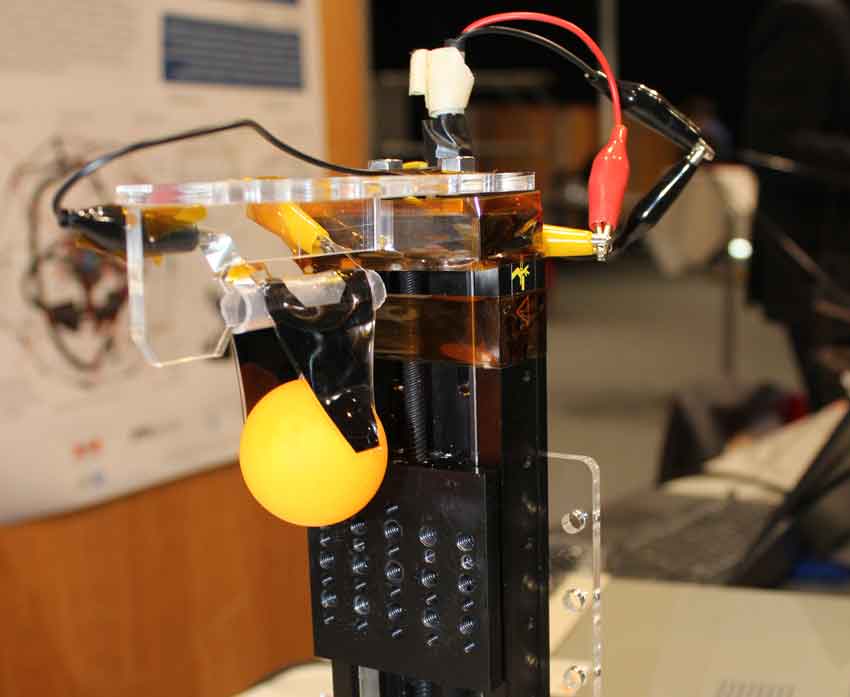

Feeding Jeff with magnets

Even in turbulent water, Jeff can be controlled with enough precision to reach a specific point in 3D space. This is demonstrated by holding a small magnet in the water for Jeff to pick up.

The Jeff swarm explores its habitat

The researchers have produced 20 Jeff robots, able to swarm out and search complex habitats in parallel, as shown in the following video.

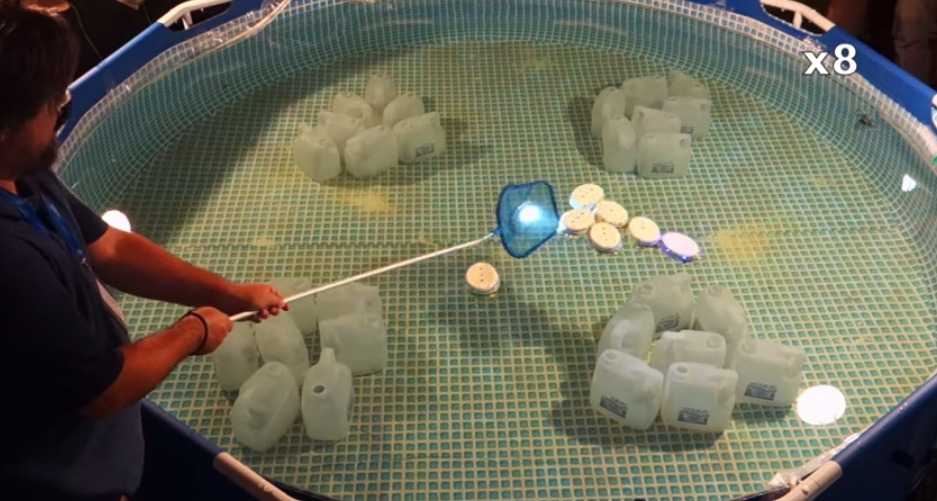

Collective search by Jeff and Lily Robots

An important aspect of the project is that all three types of robots help each other in the performance of their collective task. To achieve this, the scientists took inspiration from nature and combined several algorithms to generate a new collective program for the whole swarm. An example of this is their collective search for a magnetic (metallic) target on the sea bed, shown in a pool-based scenario. In this setting, a target is marked by a metal plate and some magnets. Several blocking objects, surrogates for debris and rocks, produce a structured habitat. The Jeff robots, on the bottom, first have to spot the target by searching and monitoring their magnetic sensors. After the first robot finds the target, it attracts others through the use of blue-light signals. The aggregation of Jeff robots then summons a swarm of Lily robots at higher water levels, which serve to pinpoint the site to humans.
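The recruitment phase of this search can be sketched in a few lines of Python. This is a toy 2D model under stated assumptions, not the project's controller: the `Jeff` class, `step`, `lily_summoned`, the sensing radius, light range, speed and quorum are all hypothetical, and the initial search pattern is left out (one robot simply starts near the target).

```python
import math
from dataclasses import dataclass

@dataclass
class Jeff:
    """Hypothetical stand-in for a Jeff robot on the pool floor."""
    x: float
    y: float
    signalling: bool = False  # True once the robot blinks its blue light

def step(robots, target, sense_radius=1.0, light_range=6.0, speed=0.5):
    """One time step: detect the target, then follow the nearest visible beacon."""
    # A robot close enough to the magnetic target starts signalling.
    for r in robots:
        if math.dist((r.x, r.y), target) <= sense_radius:
            r.signalling = True
    beacons = [(r.x, r.y) for r in robots if r.signalling]
    for r in robots:
        if r.signalling:
            continue
        # Only blue-light signals within light_range can be seen (assumed value).
        visible = [b for b in beacons if math.dist((r.x, r.y), b) <= light_range]
        if not visible:
            continue  # the search behaviour itself is omitted in this sketch
        bx, by = min(visible, key=lambda b: math.dist((r.x, r.y), b))
        d = math.dist((r.x, r.y), (bx, by))
        if d == 0:
            continue
        move = min(speed, d)
        r.x += (bx - r.x) / d * move
        r.y += (by - r.y) / d * move

def lily_summoned(robots, quorum=3):
    """The Lily swarm is called once enough Jeff robots have aggregated."""
    return sum(r.signalling for r in robots) >= quorum

target = (0.0, 0.0)
robots = [Jeff(0.5, 0.0), Jeff(3.0, 0.0), Jeff(0.0, 4.0), Jeff(-5.0, 0.0)]
for _ in range(30):
    step(robots, target)

print([r.signalling for r in robots], lily_summoned(robots))
```

Each robot that reaches the target becomes a beacon itself, so the attraction grows as the aggregation grows.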

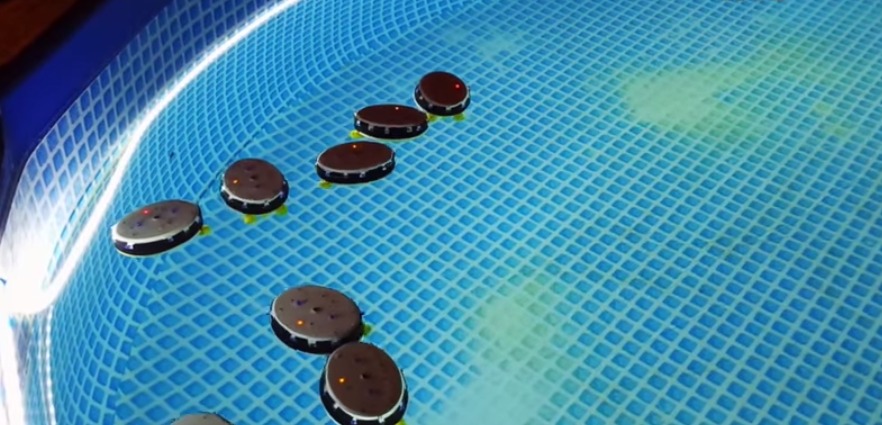

Swarm-size awareness exhibited by Lily robots

It’s important for a swarm of robots to know its own size. In CoCoRo, this was achieved by a novel algorithm inspired by slime mold amoebas; in this case, however, the chemical signals that amoebas exchange were replaced in the algorithm by blue-light blinks. These are transmitted by the robots to their neighbors and propagated to other robots in a wave-like manner. This behavior was first tested on the smaller Lily robots.
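One way to see how blink waves can reveal swarm size is the following Python sketch. It is an illustrative model, not the algorithm published by the CoCoRo team: it assumes every robot starts a wave with the same small probability per cycle, that waves are relayed one at a time through nearest neighbors, and that each robot divides the number of waves it sees by the number it expects to start itself. All function names and parameter values are hypothetical.

```python
import random
from collections import deque

def propagate(neighbors, origin):
    """Relay one blink hop by hop; return the set of robots the wave reached."""
    reached = {origin}
    queue = deque([origin])
    while queue:
        robot = queue.popleft()
        for other in neighbors[robot]:
            if other not in reached:  # a robot that already blinked ignores the echo
                reached.add(other)
                queue.append(other)
    return reached

def estimate_swarm_size(neighbors, p_start=0.01, cycles=10_000, seed=1):
    """Each robot counts passing waves and compares them with its own start rate."""
    rng = random.Random(seed)
    waves_seen = {robot: 0 for robot in neighbors}
    for _ in range(cycles):
        for robot in neighbors:
            if rng.random() < p_start:  # spontaneous blink starts a new wave
                for reached in propagate(neighbors, robot):
                    waves_seen[reached] += 1
    expected_own = p_start * cycles  # waves a single robot expects to start
    return {robot: seen / expected_own for robot, seen in waves_seen.items()}

def chain(n):
    """Assumed topology: n robots in a line, each seeing only its direct neighbors."""
    return {i: [j for j in (i - 1, i + 1) if 0 <= j < n] for i in range(n)}

estimates = estimate_swarm_size(chain(20))
print(round(estimates[0], 1))  # should land close to the true size of 20
```

No robot ever sees the whole swarm in this model; the estimate comes only from counting blinks passed on by direct neighbors.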

Swarm-size awareness exhibited by Jeff robots

After the algorithm was successfully tested on Lily robots, it was further polished to improve its reaction speed and prediction quality before it was implemented in Jeff robots, as shown by the following video.

Flocking by slime mold

The slime mold-inspired algorithm doesn’t just allow the robots to know the size of the swarm. Combined with directed motion, it can also be used to generate slime mold-like flocks of underwater robots.

Emergent taxis of robots

After adding an environmental light sensor, this bio-mimicking collective behavior turns into something the scientists call “emergent taxis.” When the entire swarm of robots runs uphill in a light gradient, even though individual members can’t read it (having only a very rough sensor impression of the local area), the swarm turns into a kind of moving compound eye, with all the robots observing their local environment and influencing each other. Finally, an algorithm designed for counting swarm members transforms the swarm into an acting organ, what might even be called a “super-organism”.

Outlook

The CoCoRo project developed and tested a whole set of “enabling technologies”, mostly in pools but sometimes in out-of-lab conditions. When the project ended in late 2014, it was clear to the research team that these technologies should be further developed and brought out of the lab. As a next step in their long-term roadmap, the research team (with additional partners) started a new project called “subCULTron” to develop the next generation of swarm robots and apply them in areas of high impact: fish and mussel farms and the Venice lagoon.

New footage of CoCoRo available on YouTube each week

During the runtime of CoCoRo, the swarm developed an enormous variety of functionalities, which were presented in several demonstrators at the project’s final review. A lot of footage was recorded and many videos were produced. These films not only give a deep insight into the different features of the CoCoRo swarm as it evolved, but also provide a behind-the-scenes glimpse into the scientific work and development that went into the project. The CoCoRo team will be uploading a new video on YouTube each week until the end of the year to celebrate “The Year of CoCoRo”. Stay tuned on Robohub for regular updates.

Links

CoCoRo Homepage

CoCoRo on YouTube

CoCoRo on Facebook

