I’ve noticed a recent trend towards robotics companies releasing videos with high production values, and this one caught my eye. Aldebaran Robotics is hiring, and it produced an edgy video called Shape the World to call attention to that fact.

Aldebaran’s Shape the World recruiting video

2012 (2nd annual) Robot Film Festival

The 2012 (2nd annual) Robot Film Festival screening took place nine days ago, in the 3LD Art & Technology Center (New York City). Selected entries appear on the Robot Film Festival website, and Automaton has put together a highlights video.

Robot video roundup: Gangnam style

What’s better than a selection of fun robot videos to brighten up the week? This week’s videos range from the sublime of “How to engineer a dog” and “Flying copters shooting hoops” to the ridiculously funny of “Bioloids Gangnam Style” and the HP/DEC combo of BD594 performing “Moves Like Jagger”. And no week is complete without cocktails, brought to you by ‘The Inebriator’ robot bartender.

DARPA Robotics Challenge update | IEEE Spectrum

Dr. Gill Pratt gave a keynote address at IROS and talked about the DARPA Robotics Challenge, including a new video of the Boston Dynamics ATLAS.


Drone on YOMYOMF

YOMYOMF is one of a long, and growing, list of YouTube-funded channels. Drone is a four-part series of short-format videos telling the story of a humanoid combat robot that encounters a situation which causes it to refuse an order, and all that transpires as a result. YOMYOMF packs a complex story, complete with a dash of character development, into a combined run length less than half that of the typical feature film. All four episodes, a couple of trailers, and a “The Making of Drone” behind-the-scenes segment have been combined into a playlist, or you can start with episode 1 and pick your way through.

Making an iCub robot “clever”

As part of the IM-CLeVeR project, the IDSIA robotics laboratory recently released a video overview of their work on the technologies, architectures and algorithms required to give an iCub robot more human-like abilities. This includes the ability to reason about and manipulate its local environment and, in the process, to acquire new skills that can be applied to future problems.

The iCub robot is an open-source humanoid platform developed by the Italian Institute of Technology and used by over 20 robotics laboratories worldwide in research areas such as human-robot interaction, computer reasoning, motion planning and “socially aware robotics”.

Some of these we’ve covered on Robohub before: From iCub to artist and iCub drums and crawls using bio-inspired control.

The IM-CLeVeR project was launched in 2009 and uses the iCub as a platform to support the goal of:

Developing a new methodology for designing robots that can cumulatively learn new skills through autonomous development based on intrinsic motivations, and reuse such skills for accomplishing multiple, complex, and externally-assigned tasks.

In the following 13-minute video, researchers from the IDSIA laboratory, an IM-CLeVeR project partner, describe motivations, challenges and successes in fields such as computer vision, motion planning, “reflex” behaviors and reinforcement learning.

For more information on the project, consult the laboratory’s other videos and technical releases, or visit the IM-CLeVeR homepage.

A list of iCub-related projects can be found here.

Robot bootcamp episode 2: Controllers | Trossen Robotics

Great video over at Trossen Robotics about various brains for your robot!

Robot Bootcamp is our newest series and a great place to get started in the world of robotics.

In Episode 2, Andrew shows off the wide world of robot controllers. From the Arduino to the Raspberry Pi, Andrew will go over the trade-offs of different controllers and when you might want to use each board.

0:31 – Robotis Controllers

1:25 – Microcontrollers

4:51 – Phidgets & Netduino

6:04 – SBCs & Embedded Computers

Most US drones openly broadcast secret video feeds | Danger Room | Wired.com

Four years after discovering that militants were tapping into drone video feeds, the U.S. military still hasn’t secured the transmissions of more than half of its fleet of Predator and Reaper drones, Danger Room has learned.


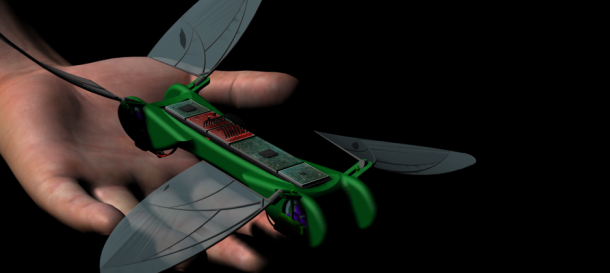

Robotic dragonflies take to the sky (with your help)

Is it a bird? Is it a plane? No, it’s the Robot Dragonfly from TechJect. The Robot Dragonfly was developed over four years by researchers at the Georgia Institute of Technology for the US Air Force. The researchers are now investigating commercial and consumer opportunities through their recently released campaign on the crowd-funding website IndieGoGo.

True consumer (as opposed to military or hobbyist) UAVs first “took to the skies” with the release of Parrot’s iPhone-controlled AR.Drone in 2010, which has been relatively unchallenged for the last two years. Now, with the help of crowd-sourced funding, new takes on consumer UAVs are emerging, some more playful and some more hobbyist-oriented.

Few, however, are as innovative as the Robot Dragonfly, whose patented, bio-inspired drive and flight system gives it the ability to hover like a helicopter and to dynamically switch to a “gliding mode”, more like a conventional fixed-wing craft. As with all crowd-sourced campaigns, the Robot Dragonfly is not yet a finished or proven product. However, if the product simulations and current prototypes (both shown in the following video) are to be believed, its four wings and light weight (25 g) should offer an interesting array of dynamic movements and flight possibilities not achievable with conventional UAV designs.

The following promotional video was released by the group along with the crowd-funding campaign. This video gives an overview of the Robot Dragonfly’s development at the Georgia Institute of Technology, takes a look at the existing research prototypes and shows simulations of the final version performing in a number of different situations.

The group are targeting a broad range of markets with their initial release and have suggested applications in augmented-reality gaming, aerial photography, telepresence, personal and commercial security, and the military. For more information, see the Robot Dragonfly IndieGoGo campaign, where team members are actively answering questions and responding to suggestions.

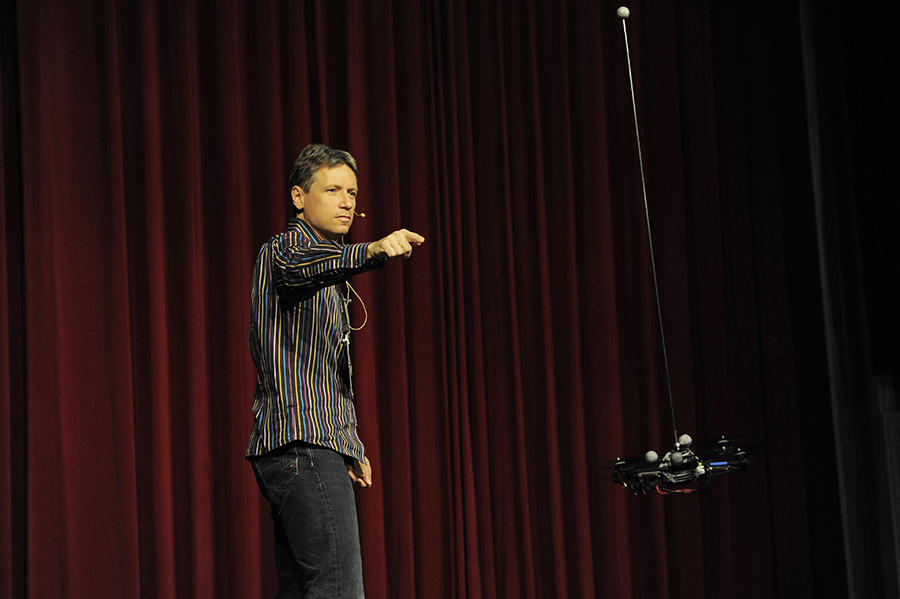

Surrounded by his quadrocopter drones on stage, Raffaello D’Andrea explains feedback control and talks about the coming Machine Revolution

During the 20-minute presentation, Raffaello D’Andrea revealed some of the key concepts behind his group’s impressive demonstrations of quadrocopters juggling, throwing and catching balls, dancing, and building structures, and illustrated them with live demonstrations of quadrocopters flying on stage.

To watch him hurtle quadrocopters towards his audience, see them juggle balls and balance poles, and to find out what happens when control fails, check out the video:

Other speakers at the Zurich.Minds event included:

- Gerhard Schröder in an interview with host and organizer Rolf Dobelli (in German)

- Tomáš Sedláček on The Economics of Good and Evil

- Kevin Heng on Atmospheric Research of Exoplanets

- Ashkan Nikeghbali on The Fascination of Mathematics

- Lorenz Meier on the Open Hardware Revolution

- Christina Warinner on Evolutionary Diet

- Robert Cialdini on Influence

- Urs Hölzle on Where the Internet Lives, The Hidden Life of Data Centers

- Simone Schürle on Nano Robots for Medicine

- Lars Kolind on Unboss! The new leadership

- John Gray on The Dangers of Faith in Progress, and

- Benjamin Beilmann playing Prokofiev’s Sonata for solo violin in D major, Op. 115

Full disclosure: I, my colleagues from the Flying Machine Arena, and Raffaello D’Andrea all work in his group at the Institute for Dynamic Systems and Control at ETH Zurich.

Here are some photos of the event:

Photos: Zurich.Minds 2012

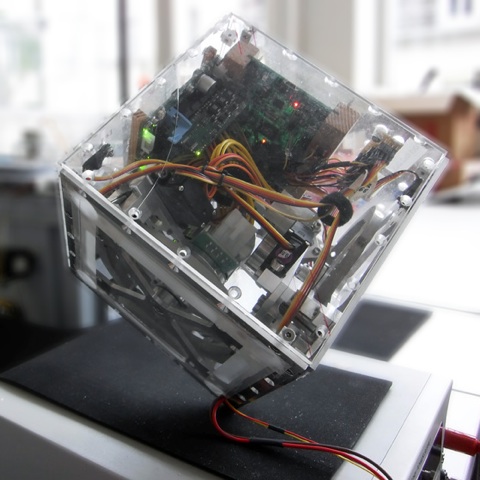

Cubli – A cube that can jump up, balance, and (soon) walk across your desk

My colleagues at the Institute for Dynamic Systems and Control at ETH Zurich have created a small robotic cube that can autonomously jump up and balance on any one of its corners.

This robot started with a simple idea:

Can we build a cube with 15 cm sides that can jump up, balance on its corner, and walk across our desk using off-the-shelf motors, batteries, and electronic components?

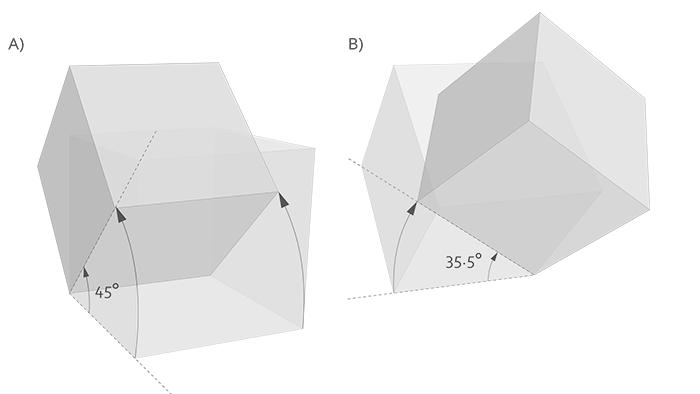

There are multiple ways to keep a cube balanced, but jumping up requires a sudden release of energy. Intuitively, momentum wheels seemed like a good way to store enough energy while still keeping the cube compact and self-contained.

Furthermore, the same momentum wheels can be used to implement a reaction-torque based control algorithm for balancing by exploiting the reaction torques on the cube’s body when the wheels are accelerated or decelerated.
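To make the reaction-torque idea concrete, here is a minimal sketch of balancing a planar (single-axis) version of such a cube with a simple PD law. The model, the parameter values (`j_body`, `m_g_l`), the gains, and the function name `simulate_balance` are all hypothetical illustrations, not the Cubli team’s controller. The body is assumed to obey `j_body * theta_ddot = m_g_l * sin(theta) - tau`, where `tau` is the motor torque applied to the wheel and the equal and opposite reaction torque acts on the body.

```python
import math


def simulate_balance(theta0, kp, kd, j_body=0.01, m_g_l=0.5,
                     dt=1e-3, t_end=3.0):
    """Simulate a planar reaction-wheel inverted pendulum under PD control.

    Hypothetical model (illustrative values, not Cubli's):
        j_body * theta_ddot = m_g_l * sin(theta) - tau
    theta is the tilt from upright (rad) and tau is the motor torque on the
    wheel; the equal and opposite reaction torque acts on the body.
    Returns final tilt, tilt rate, and the angular momentum given to the wheel.
    """
    theta, theta_dot = theta0, 0.0
    wheel_momentum = 0.0
    for _ in range(int(t_end / dt)):
        # PD law: push back against both the tilt and the tilt rate
        tau = kp * theta + kd * theta_dot
        theta_ddot = (m_g_l * math.sin(theta) - tau) / j_body
        # semi-implicit Euler integration
        theta_dot += theta_ddot * dt
        theta += theta_dot * dt
        # the motor torque spins the wheel up (or down)
        wheel_momentum += tau * dt
    return theta, theta_dot, wheel_momentum


tilt, rate, h_wheel = simulate_balance(theta0=0.05, kp=2.0, kd=0.1)
print(f"final tilt {tilt:.2e} rad, wheel momentum {h_wheel:.3f} N*m*s")
```

In this linearized toy model the upright position is only stabilized when `kp` exceeds `m_g_l` (and `kd` is positive); with a smaller gain the tilt grows away from upright (the sketch does not model the cube hitting the ground). A PD law on tilt alone also leaves the wheel spinning at whatever speed it ends up with, so a practical controller would typically feed back wheel speed as well.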

Can this work?

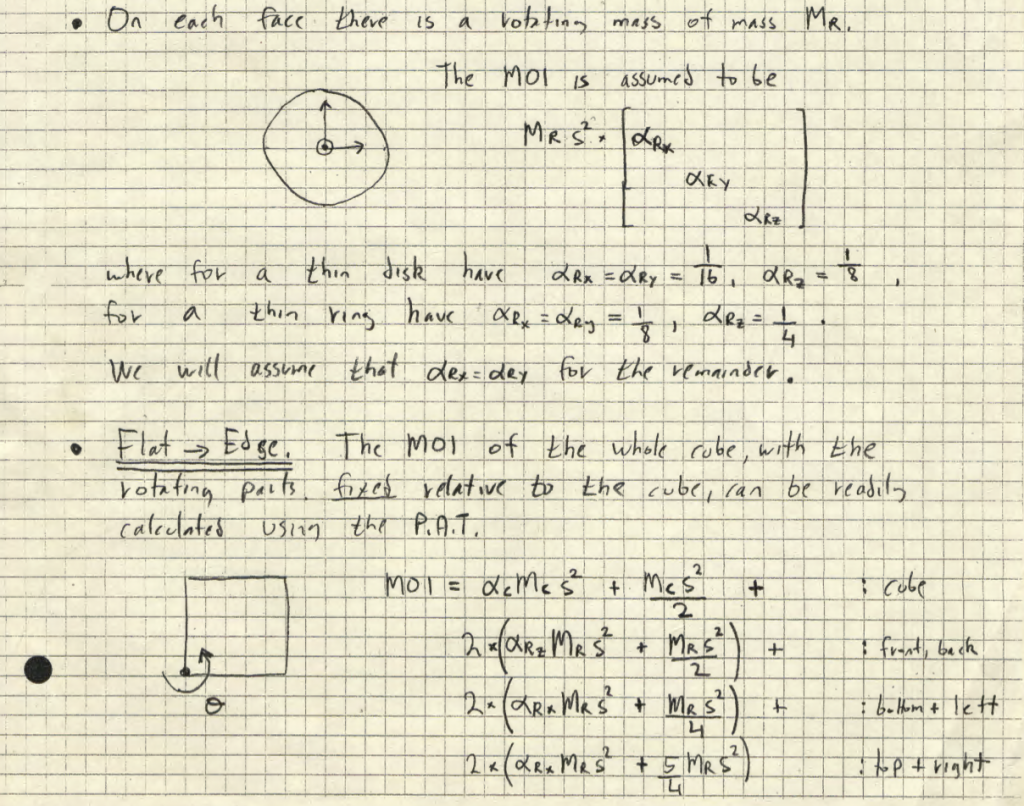

The first step in creating the robot, therefore, was to look at the physics to determine whether a jump-up based on momentum wheels was possible. The image below shows some of the math used to calculate the moment of inertia (MOI) of the wheel and of the full cube.
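As a rough illustration of this kind of MOI bookkeeping, the expressions below treat the frame as a uniform solid cube of side $l$ and mass $m_c$, and the momentum wheel as a uniform disc of radius $r$ and mass $m_w$, centered in the cube with its axis parallel to the pivot edge. These are simplifying assumptions, not the actual Cubli geometry. $I_O$ is the inertia of the whole body about the edge it pivots on once the wheel is braked and rotates with the frame.

```latex
\begin{aligned}
I_c &= \tfrac{1}{6}\, m_c l^2 && \text{(solid cube, axis through its center)}\\
I_{\text{edge}} &= I_c + m_c \left(\tfrac{l}{\sqrt{2}}\right)^2 = \tfrac{2}{3}\, m_c l^2 && \text{(parallel-axis theorem)}\\
I_w &= \tfrac{1}{2}\, m_w r^2 && \text{(uniform disc about its own axis)}\\
I_O &= \tfrac{2}{3}\, m_c l^2 + I_w + m_w \tfrac{l^2}{2} && \text{(frame plus braked wheel, about the edge)}
\end{aligned}
```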

This mathematical analysis provided a quantitative understanding of the system, which informed design choices such as the trade-offs of using three momentum wheels versus a design with a momentum wheel mounted on each of the six inner faces of the cube.

Another outcome of this analysis was a good understanding of the momentum wheel velocities required for the cube to jump up, and of the torques required to keep the cube balanced. Both factors were critical for the next step: determining the required hardware specs.
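A back-of-the-envelope version of the jump-up condition fits in a few lines. This sketch assumes a simplified planar model: a uniform solid cube frame, a disc wheel centered in the cube, instantaneous lossless braking, and a cube that starts at rest lying on a face. Because the contact force at the pivot edge exerts no moment about that edge, angular momentum about the edge is conserved during braking, so `i_wheel * w_wheel = i_pivot * w_body`; the cube makes it up if the resulting kinetic energy covers the rise in potential energy of its center of mass. The function name `required_wheel_speed` and all numbers are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def required_wheel_speed(l, m_cube, m_wheel, r_wheel):
    """Minimum wheel speed (rad/s) for a planar jump-up onto an edge.

    Assumptions (hypothetical, for illustration): the frame is a uniform
    solid cube of side l, the wheel is a uniform disc centered in the cube
    with its axis parallel to the pivot edge, braking is instantaneous and
    lossless, and the cube starts at rest lying on a face.
    """
    m_total = m_cube + m_wheel
    i_wheel = 0.5 * m_wheel * r_wheel**2           # disc about its own axis
    d = l / math.sqrt(2)                           # pivot edge to cube center
    # inertia of the whole (braked) body about the pivot edge
    i_pivot = (2.0 / 3.0) * m_cube * l**2 + i_wheel + m_wheel * d**2
    # center of mass rises from l/2 (on a face) to l/sqrt(2) (on the edge)
    delta_e = m_total * G * (d - l / 2)
    # braking conserves angular momentum about the edge:
    #   i_wheel * w_wheel = i_pivot * w_body
    # and the jump succeeds if 0.5 * i_pivot * w_body**2 >= delta_e
    return math.sqrt(2 * i_pivot * delta_e) / i_wheel


w = required_wheel_speed(l=0.15, m_cube=0.4, m_wheel=0.2, r_wheel=0.05)
print(f"{w:.0f} rad/s = {w * 60 / (2 * math.pi):.0f} rpm")
```

With these placeholder masses and radius the requirement comes out at a little over 200 rad/s (roughly 2,100 rpm); a larger wheel radius lowers it, while real braking losses would push it up.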

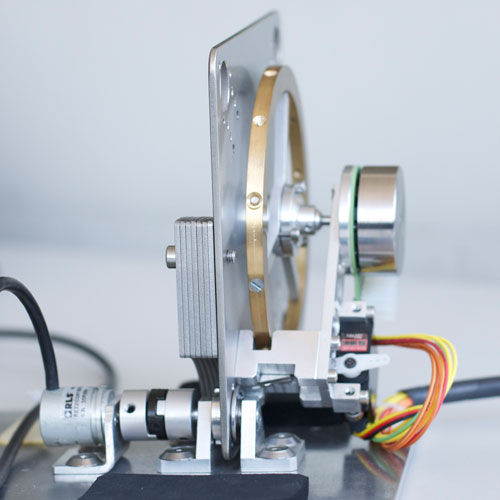

Specs and hardware design

Given the required velocities and torques determined above, it was clear that the momentum wheel’s motor and gearbox would be a major challenge in creating the robot. The mathematical model made it possible to tackle this problem systematically, through a quantitative analysis of the trade-offs between higher velocities (i.e., more energy for the jump-up) and higher torques (i.e., better stability when balancing).
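One way to see this velocity-versus-torque trade-off is through the gear ratio. The sketch below assumes a hypothetical motor with a linear torque-speed curve (no-load speed `no_load_speed`, stall torque `stall_torque`) and an ideal gearbox of ratio n, so that wheel speed = motor speed / n and wheel torque = efficiency × n × motor torque. A larger n helps the balancing torque but caps the wheel’s top speed, so only a window of ratios satisfies both requirements. Using stall torque as the limit is a deliberate simplification; a real design would use the motor’s continuous or current-limited torque. The function name is illustrative.

```python
def feasible_gear_ratios(no_load_speed, stall_torque, wheel_speed_req,
                         wheel_torque_req, efficiency=1.0):
    """Return the (n_min, n_max) window of gear ratios meeting both needs, or None.

    Hypothetical motor model with a linear torque-speed curve; speeds in
    rad/s, torques in N*m. Gear ratio n: wheel speed = motor speed / n,
    wheel torque = efficiency * n * motor torque.
    """
    # balancing happens near zero wheel speed, where the motor delivers at
    # most stall_torque, so the geared torque must reach the requirement
    n_min = wheel_torque_req / (efficiency * stall_torque)
    # the wheel's top speed (no_load_speed / n) must exceed the target;
    # at n_max exactly the motor would have no torque left, so treat it as
    # an upper bound rather than a design point
    n_max = no_load_speed / wheel_speed_req
    if n_min > n_max:
        return None
    return n_min, n_max


print(feasible_gear_ratios(1000.0, 0.1, 200.0, 0.3))
```

With these placeholder numbers the window is roughly 3 to 5; raising the torque requirement to 0.6 N·m leaves no feasible ratio, which is the kind of conflict a quantitative model makes visible early.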

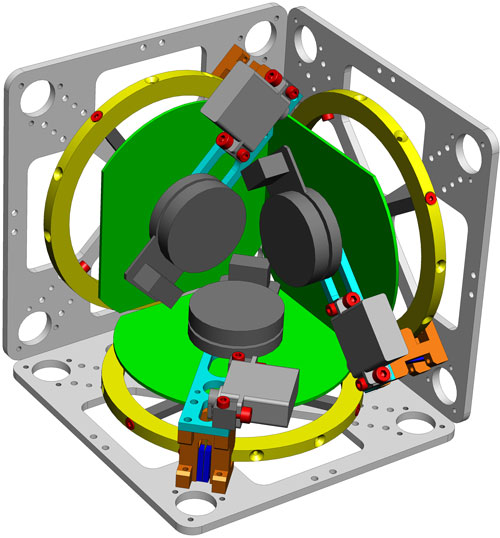

This mathematics-driven hardware design resulted in detailed specs for the robot’s core hardware components (momentum wheels, motors, gears, and batteries) and allowed a CAD design of the entire system.

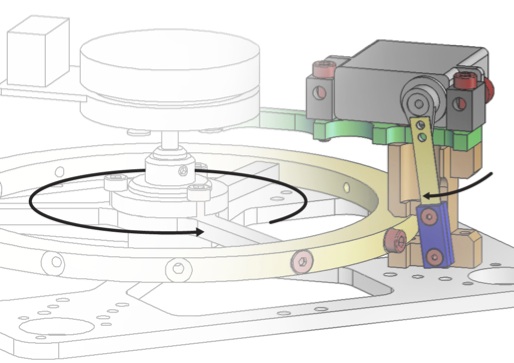

Part of this step was the design of a special brake to suddenly stop a momentum wheel to transfer its energy to the entire cube and cause it to jump up.

The photo to the left shows an early design of this brake, consisting of a screw mounted on the momentum wheel, a servo motor (shown in black) to move a metal plate (in blue) into the screw’s path (in light brown), and a mounting bracket (in light brown) to transfer the momentum wheel’s energy to the cube structure. The current design uses a combination of hardened metal parts and rubber to reduce peak forces.

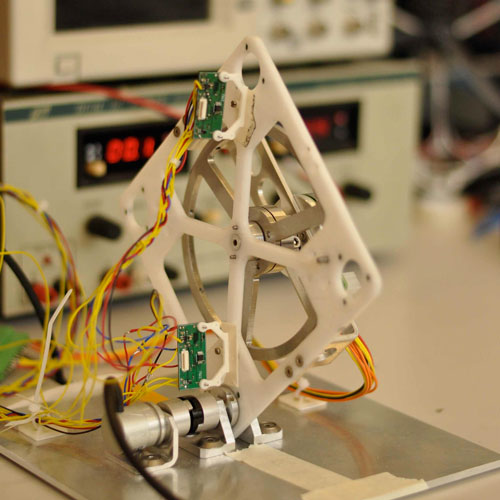

2D prototype

To validate the mechanics and electronics of the jump-up and balancing strategy, and to prove the feasibility of the overall concept, a planar version that rotates about a single axis was built:

The results obtained with this 2D version of the cube were published in an IROS 2012 paper.

The final robot

Following successful tests with the 2D version, a full robot was built. The result is Cubli, a small cube-shaped robot, named after the Swiss-German diminutive for “cube”.

As you can see in the video, Cubli can balance robustly.

However, the first jump-up tests showed that the stress resulting from suddenly braking the momentum wheel led to mechanical deformations of the momentum wheels and the aluminium frame. This made repeated jump-ups of the whole Cubli impossible without replacing parts. It was therefore decided to tweak the structure and braking mechanism to reduce the mechanical stress caused by the jump-up.

In addition to balancing, my colleagues are now investigating the use of controlled manoeuvres of jumping up, balancing, and falling over to make the Cubli walk across a surface.

Robot name: Cubli

Researchers: Mohanarajah Gajamohan, Raffaello D’Andrea

Mechanical design: Igor Thommen

Websites: http://www.idsc.ethz.ch/Research_DAndrea/Cubli, http://raffaello.name/cubli

Status: Ongoing research project

Last update: March 2013

Note: This post is part of our Swiss Robots Series. If you’d like to submit a robot to this series, or to a series for another country, please get in touch at info[@]robohub.org.

Thanks, Gajan!

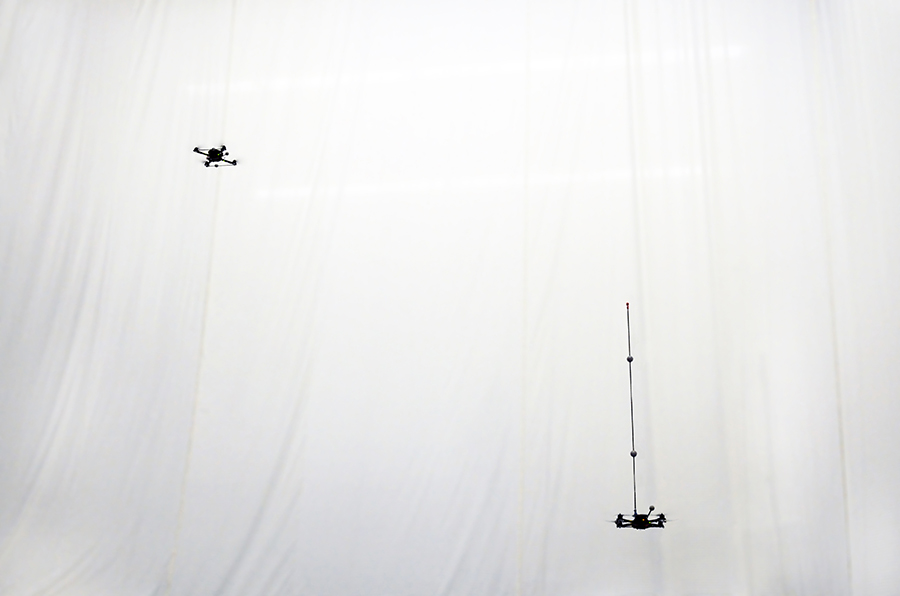

Video: Throwing and catching an inverted pendulum – with quadrocopters

Two of the most challenging problems tackled with quadrocopters so far are balancing an inverted pendulum and juggling balls. My colleagues at ETH Zurich’s Flying Machine Arena have now combined the two.

As part of his Master’s thesis, Dario Brescianini, a student at ETH Zurich’s Institute for Dynamic Systems and Control, has developed algorithms that allow quadrocopters to juggle an inverted pendulum. If you are not sure what that means (or how that is even possible), have a look at his video “Quadrocopter Pole Acrobatics”:

(Don’t miss the shock absorber blowing up in smoke at 1:34!)

The Math

A quadrocopter with a plate for balancing the pole. The cross-shaped cut-outs are used for easy attachment to the vehicle and have no influence on the pendulum’s stability.

To achieve this feat, Dario and his supervisors Markus Hehn and Raffaello D’Andrea started with a 2D mathematical model. The goal of the model was to understand what motion a quadrocopter would need to perform to throw the pendulum. In other words, what is required for the pendulum to lift off from the quadrocopter and become airborne?

This first step made it possible to determine theoretical feasibility. In addition, it showed the ideal trajectory, in terms of positions, speeds, and angles, that the quadrocopter needed to follow to throw a pendulum. It also offered insight into the throwing process, including the identification of its key design parameters.

Reality Checks

The main goal of the next step was to determine how well the theoretical model described reality: How well does the thrown pendulum’s motion match the mathematical prediction? Does the pendulum really leave the quadrocopter at the pre-computed time? How does the pendulum behave while airborne? How well do assumptions for catching the pendulum (e.g., completely inelastic collisions, completely rigid pendulum, infinite friction between quadrocopter and pendulum when balancing) hold?

This second step involved multiple tests with the physical system, including throwing the pendulum by hand to study its aerodynamic properties and precisely timing the quadrocopters’ and pendulum’s motions during the maneuver.

Analyze, Experiment, Repeat

The shock absorber at the end of the pendulum is a balloon filled with flour and attached to a sliding metal cap with zip ties.

Armed with a good theoretical model and knowledge of its strengths and limitations, the researchers set out to engineer the complete system of balancing, throwing, catching, and re-balancing the pendulum. This involved leveraging the theoretical insights on the problem’s key design parameters to adapt the physical system. For example, they equipped both quadrocopters with a 12 cm plate that could hold the pendulum while balancing, and developed shock absorbers for the pendulum’s tips.

This also involved bringing the insights gained from their initial and many subsequent experiments to bear on their overall system design. For example, a learning algorithm was added to account for model inaccuracies.

Dario writes:

This project was very interesting because it combined various areas of current research and many complex questions had to be answered: How can the pole be launched off the quadrocopter? Where should it be caught and – more importantly – when? What happens at impact?

The biggest challenge to get the system running was the catching part. We tried various catching maneuvers, but none of them worked until we introduced a learning algorithm, which adapts parameters of the catching trajectory to eliminate systematic errors.

The long and iterative process of this third step resulted in the final successful architecture to repeatedly throw and catch the pendulum on the real system, including three key components:

First, a state estimator was used to accurately predict the pendulum’s motion while in flight. Unlike the ball used in the group’s earlier demonstration of quadrocopter juggling, the pendulum’s drag properties depend on its orientation. This means, among other things, that a pendulum in free fall will move sideways if oriented at an angle. Since experiments showed that this effect was quite large for the pendulum used, an estimator including a drag model of the pendulum was developed.

This was important to accurately estimate the pendulum’s catching position.
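The sideways drift described above can be reproduced with a very small model. This sketch is not the Flying Machine Arena estimator: it treats the pendulum as a point mass with a fixed orientation (no rotation) and a hypothetical quadratic drag that is weaker along the pole axis (`c_axial`) than across it (`c_normal`). The velocity is split into components along and normal to the pole, each with its own drag, so a tilted pole picks up a horizontal force even when dropped straight down. All coefficients and the function name `horizontal_drift` are placeholders.

```python
import math


def horizontal_drift(tilt_rad, c_axial=0.002, c_normal=0.02, mass=0.1,
                     g=9.81, dt=1e-3, t_end=0.5):
    """Horizontal displacement (m) of a pole dropped from rest with a fixed tilt.

    Point-mass model with hypothetical anisotropic quadratic drag (kg/m):
    c_axial acts on the velocity component along the pole axis, c_normal on
    the component perpendicular to it. The pole does not rotate.
    """
    ex, ez = math.sin(tilt_rad), math.cos(tilt_rad)  # pole axis; x horizontal, z up
    x, vx, vz = 0.0, 0.0, 0.0
    for _ in range(int(t_end / dt)):
        # split the velocity into parts along and across the pole
        v_along = vx * ex + vz * ez
        par_x, par_z = v_along * ex, v_along * ez
        perp_x, perp_z = vx - par_x, vz - par_z
        s_par = math.hypot(par_x, par_z)
        s_perp = math.hypot(perp_x, perp_z)
        # quadratic drag opposing each component, plus gravity
        fx = -c_axial * s_par * par_x - c_normal * s_perp * perp_x
        fz = -c_axial * s_par * par_z - c_normal * s_perp * perp_z - mass * g
        vx += fx / mass * dt
        vz += fz / mass * dt
        x += vx * dt
    return x


print(horizontal_drift(0.5), horizontal_drift(0.0))
```

With these placeholder values a pole tilted by 0.5 rad drifts on the order of a centimeter in half a second, while a vertical pole falls straight down; the direction of the drift depends on the tilt and on the ratio of the two coefficients. An estimator could run a model of this kind forward from the latest measurements to predict where the pendulum will be at the catch.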

Another task of the estimator was to determine when the pendulum was in free flight and when it was in contact with a quadrocopter. This was important to switch the quadrocopter’s behavior from hovering to balancing the pendulum.

Second, a fast trajectory generator was needed to quickly move the catching quadrocopter to the estimated catching position.

Third, a learning algorithm was implemented to correct for deviations from the theoretical models for two key events. A first correction term was learnt for the desired catching point of the pendulum; this captured systematic model errors in the throwing quadrocopter’s trajectory and the pendulum’s flight. A second correction term was learnt for the catching quadrocopter’s position; this captured systematic model errors in the catching quadrocopter’s rapid movement to the catching position.
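The post does not spell out the learning rule, so the following only illustrates the general idea with a simple iteration-to-iteration update, which is an assumption here and not the algorithm from the thesis. After each attempt the observed error is measured and a fraction `gain` of it is subtracted from the correction term, so a constant systematic error (`bias`) is gradually cancelled while random noise is averaged out. Each of the two correction terms described above could be updated this way; the function name `learn_correction` and all numbers are hypothetical.

```python
import random


def learn_correction(bias, gain=0.5, noise_std=0.01, iterations=30, seed=0):
    """Iteratively learn a correction term that cancels a constant model error.

    Hypothetical setup: each attempt's observed error (m) is the systematic
    bias plus the current correction plus zero-mean Gaussian noise.
    Returns the final correction and the list of observed errors.
    """
    rng = random.Random(seed)
    correction = 0.0
    errors = []
    for _ in range(iterations):
        error = bias + correction + rng.gauss(0.0, noise_std)
        errors.append(error)
        # move the correction against the observed error
        correction -= gain * error
    return correction, errors


correction, errors = learn_correction(bias=0.08)
print(f"learnt correction {correction:.3f} m, last error {errors[-1]:.3f} m")
```

With `gain=0.5` the systematic part of the error halves on each attempt; a smaller gain converges more slowly but is less sensitive to the noise in any single throw.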

The Result

As you can see in the video embedded above, at the end of Dario’s thesis two quadrocopters could successfully throw and catch a pendulum.

Many of the key challenges of this work were caused by the highly dynamic nature of the demonstration. For example, the total time between a throw and a catch is a mere 0.65 seconds, which is very little time to move to, and come to full rest at, a catching position.
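To get a feel for what 0.65 seconds implies, consider the simplest rest-to-rest move: accelerate at a constant rate for the first half of the time and decelerate for the second half (a bang-bang profile). Covering a distance d in time T this way needs an acceleration of a = 4d/T². This is an idealized illustration, not the trajectory generator used in the project, and the 1 m distance below is hypothetical; in practice the catching vehicle has even less than 0.65 seconds, because the pendulum’s flight must be measured first.

```python
def bang_bang_accel(distance, duration):
    """Acceleration (m/s^2) for a symmetric rest-to-rest bang-bang move."""
    # accelerate for duration/2, then decelerate for duration/2:
    # distance = 2 * 0.5 * a * (duration / 2)**2 = a * duration**2 / 4
    return 4.0 * distance / duration**2


def bang_bang_profile(distance, duration, t):
    """Position (m) and speed (m/s) at time t, for 0 <= t <= duration."""
    a = bang_bang_accel(distance, duration)
    half = duration / 2.0
    if t <= half:
        return 0.5 * a * t**2, a * t
    remaining = duration - t
    return distance - 0.5 * a * remaining**2, a * remaining


print(f"{bang_bang_accel(1.0, 0.65):.2f} m/s^2")
```

A 1 m repositioning in 0.65 s already needs about 9.5 m/s², close to one g of horizontal acceleration in addition to supporting the vehicle’s own weight, and the vehicle must then stop precisely at the catching point.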

Another key challenge was the demonstration’s high cost of failure: a failed catch typically resulted in the pendulum hitting a rotor blade, with very little chance for the catching quadrocopter to recover. A crashed quadrocopter not only entailed repairs (e.g., changing a propeller), but also meant recalibrating the vehicle to re-determine its operating parameters (e.g., actual center of mass, actual thrust produced by the propellers) and restarting the learning algorithms.

Says Markus Hehn:

This was a really fun project to work on. We started off with some back-of-the-envelope calculations, wondering whether it would even be physically possible to throw and catch a pendulum. This told us that achieving this maneuver would really push the dynamic capabilities of the system.

As it turned out, it is probably the most challenging task we’ve had our quadrocopters do. With significantly less than one second to measure the pendulum flight and get the catching vehicle in place, it’s the combination of mathematical models with real-time trajectory generation, optimal control, and learning from previous iterations that allowed us to implement this.

Note: The Flying Machine Arena is an experimental lab space equipped with a motion capture system.

Full disclosure: I work with Dario Brescianini, Markus Hehn, and Raffaello D’Andrea at ETH Zurich’s Institute for Dynamic Systems and Control.

More photos:

Credits: Carolina Flores, ETH Zurich 2012

ShanghAI Lectures 2012: Lecture 1 “Intelligence – An eternal conundrum”

In this first part of the ShanghAI Lecture series, Rolf Pfeifer gives an overview of the content and scope of the project, discusses the meaning of “Intelligence”, the Turing Test, and IQ.

The ShanghAI Lectures are a videoconference-based lecture series on Embodied Intelligence run by Rolf Pfeifer and organized by me and partners around the world. The name goes back to the first lectures of the series in 2009, which were held from Shanghai Jiao Tong University in China.

The lectures roughly follow Rolf Pfeifer’s book “How the Body Shapes the Way We Think” (co-authored with Josh Bongard). Additional information, including information on publications and the series’ sponsors can be found at the ShanghAI Lectures website, which also provided a support framework to bring together students and researchers in an interactive setting during the semester.

Starting with this post, recordings of these lectures and of the guest presentations given as part of the ShanghAI Lectures will be made available on Robohub.

Related links:

ShanghAI Lectures 2012: Lecture 2 “Cognition as computation”

In this 2nd part of the ShanghAI Lectures, Rolf Pfeifer looks at the paradigm “Cognition as Computation”, shows its successes and failures and justifies the need for an embodied perspective. Following Rolf Pfeifer’s class, there are two guest lectures: one by Christopher Lueg (University of Tasmania) on embodiment and information behavior, and one by Davide Scaramuzza (AI Lab, University of Zurich) on autonomous flying robots.

The ShanghAI Lectures are a videoconference-based lecture series on Embodied Intelligence run by Rolf Pfeifer and organized by me and partners around the world.

Christopher Lueg: Embodiment and Scaffolding Perspectives in Human Computer Interaction

In this talk Professor Lueg will discuss how embodiment and scaffolding perspectives discussed in the ShanghAI Lectures on Natural and Artificial Intelligence can also be used to look at, and re-interpret, research topics in human computer interaction ranging from human information behavior in the real world to information interaction in online communities. In his work Professor Lueg understands human computer interaction as interaction with pretty much any kind of computer-based system ranging from desktop computers and mobile phones to microwave ovens and parking meters.

Davide Scaramuzza: Vision-Based Navigation: a Ground and a Flying Robot Perspective

Over the past two decades, we have witnessed rapid research progress in driver-assistance systems. Some of these systems have even reached the market and have become an essential tool for driving. GPS navigation systems are probably the most popular ones. They have revolutionized the way we travel and have certainly facilitated research towards fully autonomous navigation in outdoor environments. However, numerous challenges still have to be solved before cars can navigate fully autonomously in cluttered environments. This is especially true in urban environments, where the requirements for an autonomous system are very high.

Another research area that has lately received a lot of interest, especially after the earthquake in Fukushima, Japan, is that of micro aerial vehicles. Flying robots have numerous advantages over ground vehicles: they can access environments that humans cannot reach and, furthermore, they are much more agile than any ground vehicle. Unfortunately, their dynamics make them extremely difficult to control, particularly in GPS-denied environments.

In this talk, Davide Scaramuzza will present challenges and results for both ground vehicles and flying robots, from localization in GPS-denied environments to motion estimation. He will show several experiments and real-world applications where these systems perform successfully, and others where their application is still limited by current technology.

Related links:

ShanghAI Lectures 2012: Lecture 3 “Towards a theory of intelligence”

In this lecture Rolf Pfeifer presents some first steps toward a “theory of intelligence”, followed by guest lectures by Vincent C. Müller (Anatolia College, Greece) on computers and cognition, and by Alex Waibel (Karlsruhe Institute of Technology, Germany/Carnegie Mellon University, USA), who demonstrates a live lecture translation system.

The ShanghAI Lectures are a videoconference-based lecture series on Embodied Intelligence run by Rolf Pfeifer and organized by me and partners around the world.

Vincent C Müller: Computers Can Do Almost Nothing – Except Cognition (Perhaps)

The basic idea of classical cognitive science and classical AI is that if the brain is a computer then we could just reproduce brain function on different hardware. The assumption that this function (cognition) is computing has been much criticized; I propose to assume it is true and to see what would follow.

Let us take it as definitional that computing is ‘multiply realizable’: Strictly the same computing procedure can be realized on different hardware. (This is true if computing is understood as digital algorithmic procedures, in the sense of Church and Turing.) But in multiple realizations only the syntactic computational properties are retained from one realization to the other, while the physical and semantic properties may or may not be. So, even if the brain is indeed a computer, realizing it in different hardware might not have the desired effects because the hardware-dependent effects are not computational: Just computing can’t even switch on a red light; a computer model of an apple tree will not produce apples. But perhaps cognition is different. Is cognition one of the properties that are retained in different realizations?

References:

- Sandberg, Anders (2013), ‘Feasibility of whole brain emulation’, in Vincent C. Müller (ed.), Theory and Philosophy of Artificial Intelligence (SAPERE; Berlin: Springer), 251-64.

- Searle, John R. (1980), ‘Minds, brains and programs’, Behavioral and Brain Sciences, 3, 417-57.

Alex Waibel: Bridging the Language Divide

Related links:

ShanghAI Lectures 2012: Lecture 4 “Design principles for intelligent systems (part 1)”

This is the fourth part of the ShanghAI Lecture series, where Rolf Pfeifer starts introducing a set of “Design Principles” for intelligent systems, as outlined in the book “How the Body Shapes the Way We Think”.

In the first guest lecture, Dario Floreano (EPFL) talks about biologically inspired flying robots, and then Pascal Kaufmann (AI Lab, UZH) gives a short overview of the “Roboy” project.

The ShanghAI Lectures are a videoconference-based lecture series on Embodied Intelligence run by Rolf Pfeifer and organized by me and partners around the world.

Dario Floreano: Bio-inspired Flying Robots

Most autonomous robots operate on the ground, essentially living in 2 dimensions. Taking robots into the 3rd dimension offers new opportunities, such as performing exploration of rough terrain with small and inexpensive devices and gathering aerial information for monitoring, security, search-and-rescue, and mitigation of catastrophic events.

However, there are several novel scientific and technological challenges in perception, control, materials, and morphologies that need to be addressed. In this talk, Dario Floreano presents the long-term vision, approach, and results obtained so far to let robots live in the 3rd dimension. Taking inspiration from nature, he starts by describing how robots could take off the ground by jumping and gliding. He then moves on to autonomous flight in cluttered environments and to the issue of perception and control for small flying systems in indoor environments. This leads to the next step: outdoor flying robots that can autonomously regulate altitude, steering, and landing using only perceptual cues. He then expands the perspective by describing how multiple robots could fly in swarm formation in outdoor environments and how these achievements could possibly lead to fleets of personal aerial vehicles in the not-so-distant future. Finally, he closes the talk by going back indoors with current work on radically new concepts: flying robots that collaborate with teams of terrestrial and climbing robots, and flying robots designed to survive and even exploit collisions. Throughout the talk, Dario Floreano also emphasizes the bi-directional links between biology as a source of inspiration and robotics as a novel method to explore biological questions.

- J.-C. Zufferey, A. Beyeler and D. Floreano. Autonomous flight at low altitude using light sensors and little computational power, in International Journal of Micro Air Vehicles, vol. 2, num. 2, p. 107-117, 2010.

- M. Kovac, M. Schlegel, J.-C. Zufferey and D. Floreano. Steerable Miniature Jumping Robot, in Autonomous Robots, vol. 28, num. 3, p. 295-306, 2010.

- S. Hauert, J.-C. Zufferey and D. Floreano. Evolved swarming without positioning information: an application in aerial communication relay, in Autonomous Robots, vol. 26, num. 1, p. 21-32, 2009.

Pascal Kaufmann: Roboy

Related links:

ShanghAI Lectures 2012: Lecture 5 “Design principles for intelligent systems (part 2)”

This is the second part of the “Design Principles for Intelligent Systems” ShanghAI Lecture. After Rolf Pfeifer’s class, Barry Trimmer (Tufts University, USA) gives a guest presentation about soft robotics.

The ShanghAI Lectures are a videoconference-based lecture series on Embodied Intelligence run by Rolf Pfeifer and organized by me and partners around the world.

Barry Trimmer: Living Machines: Soft Animals, Soft Robots and Biohybrids

Related links:

ShanghAI Lectures 2012: Lecture 6 “Evolution: Cognition from scratch”

In this sixth part of the ShanghAI Lecture series, Rolf Pfeifer introduces the topic “Artificial Evolution” and gives examples of evolutionary processes in artificial intelligence. The first guest lecture, by Francesco Mondada (EPFL), is about the use of robots in daily life; in the second guest lecture, Robert Riener (ETH Zürich) talks about rehabilitation robots.

The ShanghAI Lectures are a videoconference-based lecture series on Embodied Intelligence run by Rolf Pfeifer and organized by me and partners around the world.

Francesco Mondada: Toward Robots For Daily Life

In a recent survey from the European Commission, 60% of the participants said that robots should be banned from the application area “care of children, elderly, and the disabled”, and 34% would like to ban robots from “education”. Within this framework, what is the future of robotics in daily life services? This talk presents two research projects that address this question: education using specific robotics tools, and a new form of embodiment for service robotics. Some preliminary results are illustrated, showing promising potential, as demonstrated by high acceptance of the proposed approaches.

Robert Riener: Design Principles for Intelligent Rehabilitation Robots

Integrating the human into a robotic rehabilitation system can be challenging not only from a biomechanical point of view but also with regard to psycho-physiological aspects. Biomechanical integration involves ensuring that the system to be used is ergonomically acceptable and “user-cooperative”. Psycho-physiological integration involves recording and controlling the patient’s physiological reactions so that the patient receives appropriate stimuli and is challenged in a moderate but engaging way. This talk presents basic design criteria that should be taken into account when developing and applying an intelligent robotic system that is in close interaction with the human subject. One must carefully take into account the constraints given by human biomechanical, physiological and psychological functions in order to optimize device function without causing undue stress or harm to the human user.

References:

- Robert Riener, Lars Lünenburger, Gery Colombo: Human-centered robotics applied to gait training and assessment, Journal of Rehabilitation Research and Development, 43(5), August/September 2006, pp. 679-694.

- Alexander Koenig, Ximena Omlin, Lukas Zimmerli, Mark Sapa, Carmen Krewer, Marc Bolliger, Friedemann Müller, Robert Riener: Psychological state estimation from physiological recordings during robot-assisted gait rehabilitation, Journal of Rehabilitation Research and Development, 48(4), accepted for publication, 2011.

- Robert Riener: What Has to Be Known about Human Physiology to Develop Wearable Robots? Workshop on Wearable Robotics, IEEE ICRA 2012, St. Paul, USA.

ShanghAI Lectures 2012: Lecture 7 “Collective Intelligence: Cognition from interaction”

In the 7th part of the ShanghAI Lecture series, Rolf Pfeifer talks about collective intelligence. Examples include ants that find the shortest path to a food source, robots that clean up, and birds that form flocks. In the guest lecture, István Harmati (Budapest University of Technology and Economics, Hungary) discusses the coordination of multi-agent robotic systems.
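The ant example can be illustrated with a small sketch of the positive feedback behind it. This is a deterministic, mean-field simplification in the spirit of the well-known double-bridge experiments, not material from the lecture itself. Ants choose between a short and a long branch with a probability that grows with pheromone strength, and the shorter branch is reinforced faster because trips along it are completed more often. The function name `double_bridge`, the branch lengths, the evaporation rate and the exponent `alpha` are all hypothetical choices made for illustration.

```python
def double_bridge(short_len=1.0, long_len=2.0, evaporation=0.1, alpha=2.0, steps=100):
    """Mean-field sketch: fraction of ants choosing the short branch after `steps` rounds.

    All parameters are illustrative assumptions, not values from the lecture.
    """
    tau_short, tau_long = 1.0, 1.0  # initial pheromone, equal on both branches
    p_short = 0.5
    for _ in range(steps):
        # Choice probability grows nonlinearly with pheromone (exponent alpha)
        w_short, w_long = tau_short ** alpha, tau_long ** alpha
        p_short = w_short / (w_short + w_long)
        # Evaporate, then deposit; deposit is scaled by 1/length because
        # ants on a shorter branch complete more trips per unit time
        tau_short = (1 - evaporation) * tau_short + p_short / short_len
        tau_long = (1 - evaporation) * tau_long + (1 - p_short) / long_len
    return p_short


print(double_bridge())                              # close to 1: the colony converges on the short branch
print(double_bridge(short_len=1.0, long_len=1.0))   # 0.5: equal branches, no preference emerges
```

No individual ant compares path lengths here; the preference for the shorter route emerges from evaporation and reinforcement alone, which is the point of the example.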

István Harmati: Coordination of Multi-Agent Robotic Systems

Control and coordination of multi-agent autonomous systems play an increasingly important role in robotics. Such systems are used in a variety of applications, including finding and moving objects, search and rescue, target tracking, target assignment, optimal military maneuvers, formation control, traffic control and robotic games (soccer, hockey). Control and coordination are often implemented at different levels. At the highest level, robot teams are given a global task to perform (e.g., attacking in robot soccer). Since it is hard to find an optimal solution to such global challenges, the methods presented are based mainly on heuristics and artificial intelligence (fuzzy systems, value rules, etc.). At the middle level, individual robots are given tactics for reaching the global goal, such as kicking the ball toward the goal or occupying a strategic position on the field. At the lowest level, each robot is controlled to perform the desired behavior (specified by the strategy and the tactics). Finally, we present the main issues and general ideas related to the efficient coordination of multi-agent systems.
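The three-level structure described above can be sketched as a toy control step for a robot soccer team. This is a minimal illustration under assumed rules, not the method presented in the lecture. The field coordinates, the ball-position rule for choosing between attacking and defending, the support and blocking positions, and the proportional controller at the lowest level are all hypothetical choices. The abstract mentions fuzzy systems and rule-based heuristics for the top level; a single if-rule stands in for them here.

```python
import math
from dataclasses import dataclass


@dataclass
class Robot:
    name: str
    x: float
    y: float


# Hypothetical field layout: own goal at (-10, 0)
OWN_GOAL = (-10.0, 0.0)


def choose_strategy(ball_x: float) -> str:
    """Highest level: a heuristic rule picks a team-wide task."""
    return "attack" if ball_x >= 0.0 else "defend"


def assign_tactics(strategy, robots, ball):
    """Middle level: give each robot an individual target point."""
    # The robot nearest the ball goes for it (kicking is omitted in this sketch)
    nearest = min(robots, key=lambda r: math.dist((r.x, r.y), ball))
    targets = {}
    for r in robots:
        if r is nearest:
            targets[r.name] = ball
        elif strategy == "attack":
            targets[r.name] = (ball[0] + 2.0, 3.0)         # support position ahead of the ball
        else:
            targets[r.name] = (OWN_GOAL[0] + 2.0, 0.0)     # block in front of own goal
    return targets


def motion_command(robot, target, gain=0.5, max_speed=1.0):
    """Lowest level: proportional controller with saturated speed."""
    vx, vy = gain * (target[0] - robot.x), gain * (target[1] - robot.y)
    speed = math.hypot(vx, vy)
    if speed > max_speed:
        vx, vy = vx * max_speed / speed, vy * max_speed / speed
    return vx, vy


def control_step(robots, ball):
    """Run strategy -> tactics -> behavior once; return strategy and velocity commands."""
    strategy = choose_strategy(ball[0])
    targets = assign_tactics(strategy, robots, ball)
    return strategy, {r.name: motion_command(r, targets[r.name]) for r in robots}


team = [Robot("a", 1.0, 0.0), Robot("b", -5.0, 2.0)]
strategy, commands = control_step(team, ball=(2.0, 0.0))
print(strategy, commands)  # robot "a" heads for the ball, "b" moves to a support position
```

Each level only consumes the output of the level above it, so a heuristic or fuzzy strategy layer could be swapped in without touching the motion controller.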

ShanghAI Lectures 2012: Lecture 8 “Where is human memory?”

In this 8th part of the ShanghAI Lecture series, Rolf Pfeifer looks into differences between human and computer memory and shows several types of “memories”. In the first guest lecture, Vera Zabotkina (Russian State University for the Humanities) talks about cognitive modeling in linguistics; in the second guest lecture, José del R. Millán (EPFL) demonstrates a brain-computer interface.

Vera Zabotkina: Cognitive modeling in linguistics: conceptual metaphors

The concepts that govern our thought are not just matters of the intellect. They also govern our everyday functioning, down to the most mundane details. Our concepts structure what we perceive, how we get around in the world, and how we relate to other people. Our conceptual system thus plays a central role in defining our everyday realities. If we are right in suggesting that our conceptual system is largely metaphorical, then the way we think, what we experience, and what we do every day is very much a matter of metaphor… (Lakoff & Johnson, 1980)

In this lecture, Vera addresses the integration challenge facing cognitive science as an interdisciplinary endeavor. She highlights the interconnection between AI and linguistics and discusses conceptual metaphors.

José del R. Millán: Brain-Computer Interfacing

In this lecture, José del R. Millán (EPFL) demonstrates the use of human brain signals to control devices, such as wheelchairs, and interact with our environment.

Related links:

- Slides (4.06 MB)

- ShanghAI Lectures website

- Book: How the Body Shapes the Way We Think

- George Lakoff and Mark Johnson, Metaphors We Live By. Chicago: University of Chicago Press, 1980.

- Cliff Goddard, Semantic Analysis: A Practical Introduction. Second edition. Oxford: Oxford University Press, 2011.

- James Pustejovsky, The Generative Lexicon. Cambridge, MA: MIT Press, 1995.